A Benchmark for 3D Mesh Segmentation

Overview

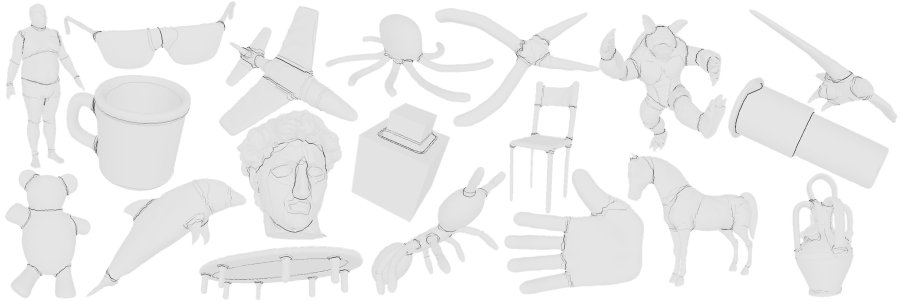

This mesh segmentation benchmark provides data for quantitative analysis of how people decompose objects into parts and for comparison of automatic mesh segmentation algorithms. To build the benchmark, we recruited eighty people to manually segment surface meshes into functional parts, yielding an average of 11 human-generated segmentations for each of 380 meshes across 19 object categories (shown in the figure above). This data set provides a sampled distribution over "how humans decompose each mesh into functional parts," which we treat as a probabilistic "ground truth" (darker lines in the figure above show places where more people placed a segmentation boundary). Given this data set, it is possible to analyze properties of the human-generated segmentations to learn what they have in common with each other (and with computer-generated segmentations), and to compute evaluation metrics that measure how well the human-generated segmentations match computer-generated ones for the same mesh.
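The probabilistic ground truth can be made concrete as a per-edge boundary frequency: for each edge shared by two faces, the fraction of human segmentations that put those two faces in different segments. The following is a minimal, standalone Python 3 sketch under stated assumptions (a mesh given as lists of vertex indices per face, and each segmentation given as one integer label per face); the names `face_adjacency` and `boundary_probability` are hypothetical and are not part of the benchmark software.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Edge = Tuple[int, int]

def face_adjacency(faces: Sequence[Sequence[int]]) -> Dict[Edge, List[int]]:
    """Map each undirected edge (v_min, v_max) to the indices of the faces that contain it."""
    edge_faces: Dict[Edge, List[int]] = defaultdict(list)
    for f, face in enumerate(faces):
        n = len(face)
        for i in range(n):
            a, b = face[i], face[(i + 1) % n]
            edge_faces[(min(a, b), max(a, b))].append(f)
    return edge_faces

def boundary_probability(faces: Sequence[Sequence[int]],
                         segmentations: Sequence[Sequence[int]]) -> Dict[Edge, float]:
    """For each edge shared by exactly two faces, the fraction of segmentations
    (per-face label lists, hypothetical input format) that cut along that edge."""
    prob: Dict[Edge, float] = {}
    for edge, adj in face_adjacency(faces).items():
        if len(adj) != 2:
            continue  # this sketch skips border and non-manifold edges
        f0, f1 = adj
        cuts = sum(1 for labels in segmentations if labels[f0] != labels[f1])
        prob[edge] = cuts / len(segmentations)
    return prob

# Example: two triangles sharing edge (1, 2); two of three "people" cut along it.
faces = [[0, 1, 2], [1, 3, 2]]
people = [[0, 0], [0, 1], [5, 7]]
print(boundary_probability(faces, people))  # {(1, 2): 0.6666666666666666}
```

Darker boundary lines in a rendering would then correspond to edges with higher values in this map.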

Data Set

The benchmark is based on a set of polygonal models generously provided by Daniela Giorgi (IMATI-CNR), within the scope of the AIM@SHAPE and FOCUS K3D projects, and other curators of the Watertight Models Track of SHREC 2007 (note the license). For each of those models, we include a set of manual segmentations created by people from around the world and a set of automatic segmentations created by several different algorithms.
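Both kinds of segmentation can be treated as a labeling of each mesh's faces. The Python 3 sketch below assumes, without confirmation from this page, a plain-text file with one integer segment label per line, where line i gives the label of face i. It also remaps labels to 0..k-1 in order of first appearance, since the segment ids chosen by different people or algorithms are arbitrary. All function names are hypothetical.

```python
from pathlib import Path
from typing import Dict, List

def parse_face_labels(text: str) -> List[int]:
    """Parse one integer label per non-empty line; line i is assumed to label face i."""
    return [int(line) for line in (ln.strip() for ln in text.splitlines()) if line]

def read_face_labels(path: str) -> List[int]:
    """Read a per-face label file from disk (assumed format, see parse_face_labels)."""
    return parse_face_labels(Path(path).read_text())

def relabel_consecutive(labels: List[int]) -> List[int]:
    """Map arbitrary segment ids to 0..k-1 in order of first appearance."""
    mapping: Dict[int, int] = {}
    return [mapping.setdefault(label, len(mapping)) for label in labels]

labels = parse_face_labels("3\n3\n7\n\n1\n")
print(labels)                       # [3, 3, 7, 1]
print(relabel_consecutive(labels))  # [0, 0, 1, 2]
```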

Software

In addition to the data set, we provide software for evaluation, analysis, and viewing of mesh segmentations. The code is written in C++, is free to use, and is known to compile with g++ 4.x on 32- and 64-bit Linux and with Visual Studio 2005 on Windows, provided that OpenGL is installed. Here is a list of executables and their usage.

We also provide Python scripts (compatible with Python 2.x but not 3.x) that automatically run evaluation and analysis experiments, plot the results (using Matlab), create reports, and generate colored images of mesh segmentations. Our intention is that you can use this software to study and compare your own segmentation algorithms. Please refer to the instructions included in the software download for details.
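As a rough stand-in for the image-generation scripts (not a reproduction of them), a segmentation can be inspected by writing an OFF file that carries one color per face, which many mesh viewers can display. This standalone Python 3 sketch assumes faces given as vertex-index lists and labels already numbered 0..k-1; `segment_colors` and `colored_off` are hypothetical names, and appending an RGB triple after each face's vertex indices follows the common OFF per-face color convention.

```python
import colorsys
from typing import List, Sequence, Tuple

def segment_colors(num_segments: int) -> List[Tuple[int, int, int]]:
    """One RGB color (0-255 per channel) per segment, using evenly spaced hues."""
    colors = []
    for s in range(num_segments):
        r, g, b = colorsys.hsv_to_rgb(s / max(num_segments, 1), 0.7, 0.95)
        colors.append((round(r * 255), round(g * 255), round(b * 255)))
    return colors

def colored_off(vertices: Sequence[Sequence[float]],
                faces: Sequence[Sequence[int]],
                labels: Sequence[int]) -> str:
    """Return ASCII OFF text with each face's segment color appended after its indices."""
    colors = segment_colors(max(labels) + 1)  # assumes labels are 0..k-1
    lines = ["OFF", f"{len(vertices)} {len(faces)} 0"]
    # Up to 9 significant digits per coordinate.
    lines += [" ".join(f"{c:.9g}" for c in v) for v in vertices]
    for face, label in zip(faces, labels):
        r, g, b = colors[label]
        lines.append(f"{len(face)} {' '.join(map(str, face))} {r} {g} {b}")
    return "\n".join(lines) + "\n"

print(colored_off([(0, 0, 0), (1, 0, 0), (0, 1, 0)], [[0, 1, 2]], [0]))
```

Evenly spaced hues keep neighboring segment ids visually distinct for small numbers of segments; a fixed palette would work equally well.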

Results

The benchmark has been used to compare segmentations computed by the following algorithms:

- K-Means [Shlafman et al. 2002, paper]

- Random Walks [Lai et al. 2008, paper]

- Fitting Primitives [Attene et al. 2006, paper, code]

- Normalized Cuts [Golovinskiy and Funkhouser 2008, paper]

- Randomized Cuts [Golovinskiy and Funkhouser 2008, paper]

- Core Extraction [Katz et al. 2005, paper]

- Shape Diameter Function [Shapira et al. 2008, paper, code]

For each of these algorithms, we have computed four metrics to evaluate how similar their automatically generated segmentations are to the ones created by people for the same models. The following links provide plots of those evaluation metrics for different slices of the data:

- Averaged over the whole benchmark

- Averaged over each category in the benchmark

- Averaged over the whole benchmark for different numbers of segments (numSegments)
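As an illustration of how a metric of this kind can compare one automatic segmentation against several human ones, the Rand Index measures the fraction of face pairs on which two segmentations agree (both place the pair in the same segment, or both place it in different segments). A per-mesh score can then be averaged over that mesh's human segmentations, and further over the meshes in a category or the whole benchmark. This Python 3 sketch is unweighted over faces and reports 1 - RI as a dissimilarity; whether the benchmark weights faces (for example, by area) or uses exactly this form is an assumption not confirmed here, and the function names are hypothetical.

```python
from collections import Counter
from typing import Sequence

def _pairs(n: int) -> int:
    """Number of unordered pairs among n items."""
    return n * (n - 1) // 2

def rand_index(a: Sequence[int], b: Sequence[int]) -> float:
    """Fraction of face pairs on which per-face labelings a and b agree."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("labelings must have equal length >= 2")
    total = _pairs(len(a))
    same_both = sum(_pairs(c) for c in Counter(zip(a, b)).values())  # together in both
    same_a = sum(_pairs(c) for c in Counter(a).values())              # together in a
    same_b = sum(_pairs(c) for c in Counter(b).values())              # together in b
    apart_both = total - same_a - same_b + same_both                   # apart in both
    return (same_both + apart_both) / total

def mean_rand_distance(auto: Sequence[int], humans: Sequence[Sequence[int]]) -> float:
    """Average of 1 - RI between one automatic labeling and each human labeling."""
    return sum(1.0 - rand_index(auto, h) for h in humans) / len(humans)

auto = [0, 0, 1, 1]
humans = [[5, 5, 9, 9], [0, 1, 0, 1]]
print(rand_index(auto, humans[0]))       # 1.0
print(mean_rand_distance(auto, humans))  # roughly 0.333 (1/3 up to rounding)
```

Counting pairs through a contingency table (the `Counter` over label pairs) avoids looping over all O(n^2) face pairs.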

We have also analyzed and compared the geometric properties of the manual segmentations and of the automatic segmentations produced by each of these algorithms. The following links provide plots of the computed distributions for 11 different properties:

- Number of Segments, Segment Area, Cut Perimeter, Min Curvature, Max Curvature, Mean Curvature, Gaussian Curvature, Curvature Derivative, Dihedral Angle, Convexity, Compactness
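Some of these properties follow directly from a mesh and a per-face labeling. For example, the number of segments is the number of distinct labels, and a segment-area distribution can be built from each segment's share of the total surface area. This Python 3 sketch assumes a triangle mesh (vertex coordinates plus vertex-index triples) and per-face labels; normalizing by total area is an assumption about making the values comparable across meshes, not the benchmark's confirmed definition. The function names are hypothetical.

```python
import math
from typing import Dict, Sequence

def triangle_area(p: Sequence[float], q: Sequence[float], r: Sequence[float]) -> float:
    """Area of a 3D triangle: half the norm of the cross product of two edge vectors."""
    ux, uy, uz = (q[i] - p[i] for i in range(3))
    vx, vy, vz = (r[i] - p[i] for i in range(3))
    cx, cy, cz = uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)

def segment_area_fractions(vertices: Sequence[Sequence[float]],
                           faces: Sequence[Sequence[int]],
                           labels: Sequence[int]) -> Dict[int, float]:
    """Each segment's area divided by the total surface area (triangles assumed)."""
    areas: Dict[int, float] = {}
    for (a, b, c), label in zip(faces, labels):
        areas[label] = areas.get(label, 0.0) + triangle_area(vertices[a], vertices[b], vertices[c])
    total = sum(areas.values())
    return {label: area / total for label, area in areas.items()}

# A unit square split into two triangles, each in its own segment.
square = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]
fractions = segment_area_fractions(square, [(0, 1, 2), (0, 2, 3)], [0, 1])
print(fractions)       # {0: 0.5, 1: 0.5}
print(len(fractions))  # 2 (number of segments)
```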

Downloads

All data and software can be downloaded for free via the following links:

- MeshsegBenchmark-1.0 (99MB) - manual segmentations, mesh models, and software in one zip file

- Meshes Only (82MB) - in OFF format

- Segmentations from Humans Only (10MB)

- Source code and Scripts Only (9MB)

- Auxiliary Data (118MB)

- Segmentations from Algorithms Only (37MB)

- Curvature Info (81MB)

- Everything (216MB) - MeshsegBenchmark-1.0 + Auxiliary Data
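The meshes are distributed in OFF format. A minimal ASCII OFF reader is sketched below in Python 3. It assumes one vertex or one face per line (common in practice, though the format does not strictly require it), ignores `#` comments and any trailing per-face color values, and does not handle binary OFF or the COFF/NOFF variants; `parse_off` is a hypothetical name.

```python
from typing import List, Tuple

def parse_off(text: str) -> Tuple[List[Tuple[float, ...]], List[List[int]]]:
    """Parse ASCII OFF text into (vertices, faces)."""
    lines = [ln.split("#", 1)[0].strip() for ln in text.splitlines()]
    lines = [ln for ln in lines if ln]  # drop blank and comment-only lines
    header = lines[0].split()
    if header[0] != "OFF":
        raise ValueError("expected an ASCII OFF header")
    # Counts may follow "OFF" on the same line or appear on the next line.
    if len(header) > 1:
        counts, body = header[1:], lines[1:]
    else:
        counts, body = lines[1].split(), lines[2:]
    nv, nf = int(counts[0]), int(counts[1])
    if len(body) < nv + nf:
        raise ValueError("file is shorter than its vertex and face counts")
    vertices = [tuple(float(x) for x in body[i].split()[:3]) for i in range(nv)]
    faces = []
    for line in body[nv:nv + nf]:
        parts = line.split()
        n = int(parts[0])
        faces.append([int(x) for x in parts[1:1 + n]])  # ignore optional trailing color
    return vertices, faces

verts, faces = parse_off("OFF\n4 2 0\n0 0 0\n1 0 0\n1 1 0\n0 1 0\n3 0 1 2\n3 0 2 3\n")
print(len(verts), faces)  # 4 [[0, 1, 2], [0, 2, 3]]
```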

Citation

If you use any part of this benchmark, please cite:

A Benchmark for 3D Mesh Segmentation

ACM Transactions on Graphics (Proc. SIGGRAPH), 28(3), 2009. [BibTeX]

Feedback

Please send us an email if you have any questions.