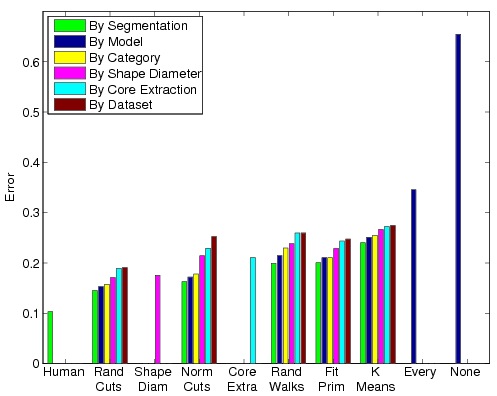

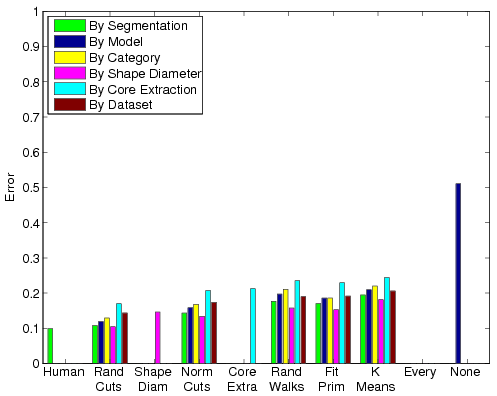

Rand Index

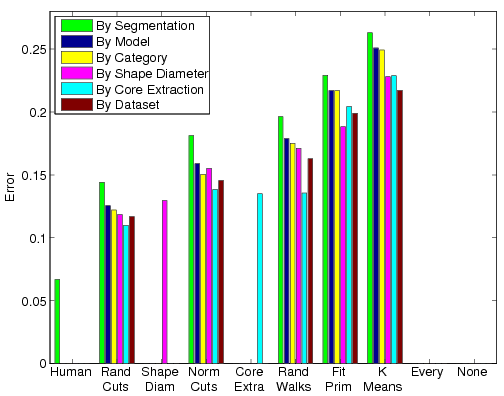

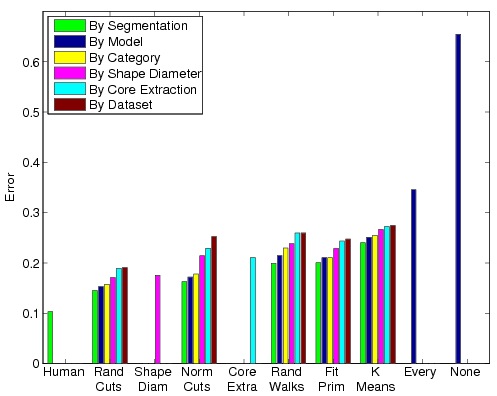

Cut Discrepancy

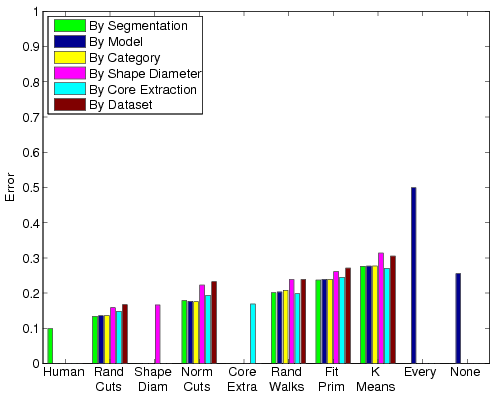

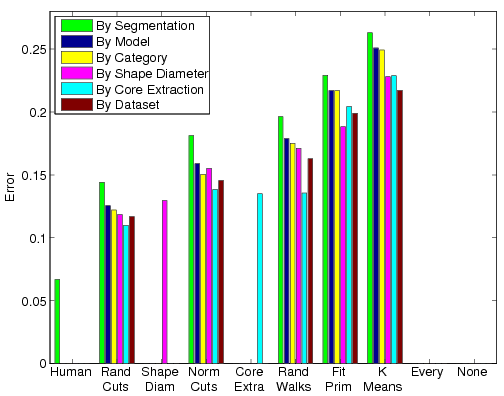

Global Consistency Error

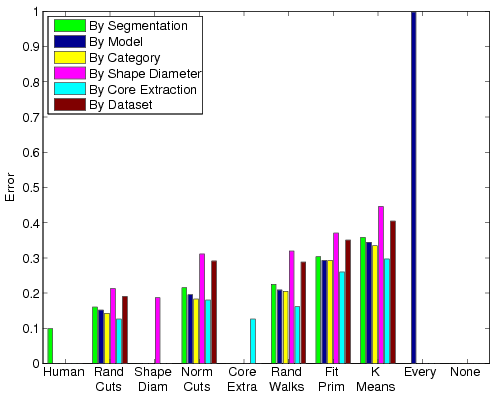

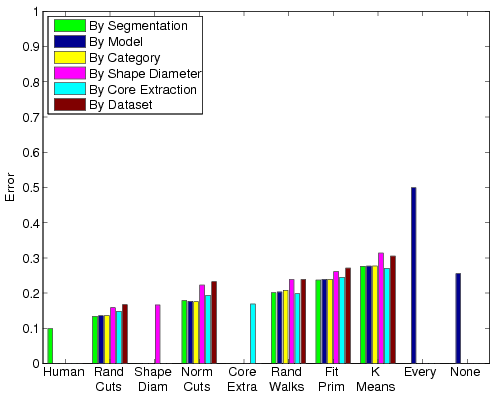

Local Consistency Error

Hamming Distance

Hamming Distance - Missing Rate

Hamming Distance - False Alarm Rate

Rand Index | Cut Discrepancy | Global Consistency Error | Local Consistency Error | Hamming Distance | Hamming Distance - Missing Rate | Hamming Distance - False Alarm Rate